Building pipelines with gRPC and Docker containers

To create a pipeline, you choose the modules and execute them. The orchestrator, a GrpcMaestro, connects the modules and runs your pipeline. Both the modules and the orchestrator run as Docker containers.

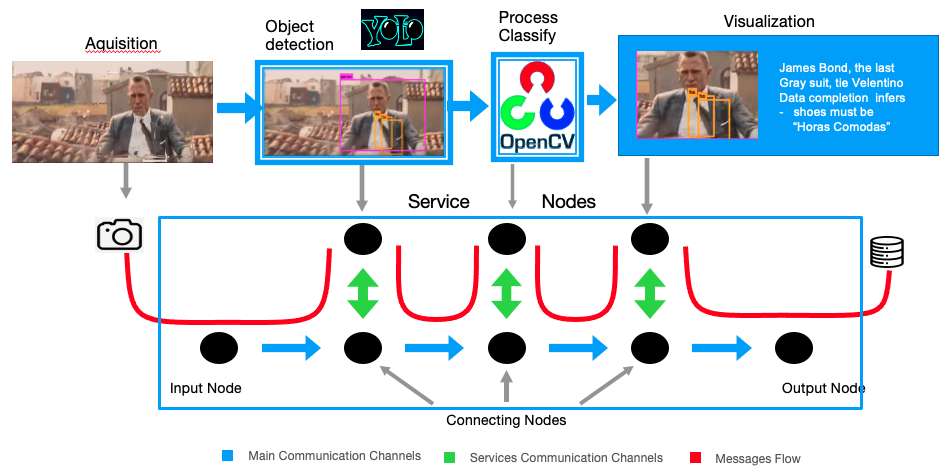

The conceptual structure is easier to understand with an example. Consider the task shown in the figure, where we assume that each module is available and “ACUMOS-ready”. The flow is simple: acquire/load one image, detect objects in the image, crop the objects, and classify them. The orchestrator connects these components by passing messages between them.

To create and deploy this pipeline, provided the services exist, you only have to define the topology of the pipeline in a couple of configuration files (see here for details).
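As a rough illustration of the idea, the sketch below describes the example flow as data (stages plus links) and lets a tiny in-process orchestrator pass messages from one stage to the next. Everything in it is hypothetical: the stage functions, the `TOPOLOGY` layout and `run_pipeline` are stand-ins chosen for this example, not the actual configuration format or API of the orchestrator. In the real system each stage would be a gRPC service in its own Docker container.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical stand-ins for the four modules. Each one receives a message
# (a plain dict here) and returns an enriched copy of it.
def load_image(_: Optional[dict]) -> dict:
    return {"image": "street.jpg"}

def detect_objects(msg: dict) -> dict:
    # Pretend detector: two fixed bounding boxes as (x, y, width, height).
    return {**msg, "boxes": [(10, 20, 50, 80), (200, 40, 60, 90)]}

def crop_objects(msg: dict) -> dict:
    # One crop per detected box, identified by image name and box index.
    crops = [f"{msg['image']}#{i}" for i in range(len(msg["boxes"]))]
    return {**msg, "crops": crops}

def classify(msg: dict) -> dict:
    # Pretend classifier: assigns the same placeholder label to every crop.
    return {**msg, "labels": ["object" for _ in msg["crops"]]}

# Assumed topology layout: named stages and directed links between them.
Stage = Callable[[Optional[dict]], dict]
TOPOLOGY: Dict[str, object] = {
    "stages": {
        "source": load_image,
        "detector": detect_objects,
        "cropper": crop_objects,
        "classifier": classify,
    },
    "links": [
        ("source", "detector"),
        ("detector", "cropper"),
        ("cropper", "classifier"),
    ],
}

def run_pipeline(topology: Dict[str, object]) -> dict:
    """Walk a linear topology, handing each stage's output to the next."""
    stages: Dict[str, Stage] = topology["stages"]  # type: ignore[assignment]
    links: List[Tuple[str, str]] = topology["links"]  # type: ignore[assignment]
    next_stage = dict(links)
    targets = set(next_stage.values())
    # The entry stage is the one that no link points to.
    current = next(name for name in stages if name not in targets)
    message = stages[current](None)
    while current in next_stage:
        current = next_stage[current]
        message = stages[current](message)
    return message

result = run_pipeline(TOPOLOGY)
print(result["labels"])
```

Keeping the topology separate from the stage implementations mirrors the point made above: once the services exist, only the description of how they connect needs to change.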

Joining all the pieces

- Creating modules: To create a new module, define your own gRPC service and then build a Docker image that contains it. For an example, see this well-known human pose detector configured as a gRPC-enabled service: https://github.com/DuarteMRAlves/open-pose-grpc-service

- Orchestrating and deploying: Once all services are defined, you can create and deploy the pipeline by configuring only a few parameters. Instructions and code are available here: https://github.com/DuarteMRAlves/Pipeline-Orchestrator

Tag-my-outfit: Ready to run!

Tag-my-outfit was our very first pipeline. It combines generic image processing modules with recent research developments customized for a particular dataset. Its job is to classify the attributes of clothing in images.

See all details on the tag-my-outfit webpage. The code is on GitHub pipeline, but the whole system can be downloaded as a single zip file here … and run with a single command.

Resources

Presentations and document resources

If you need help or want to know more details, please contact:

Duarte M. Alves – duartemalves AT tecnico.ulisboa.pt